Hence, the efficiency of the sales process increases. Automating this task gives sales teams more time for approaching the prospects. This process usually involves extracting contact information like the phone number, email address, and contact name for a given list of websites. Other companies accelerate their sales process by using web scraping for lead generation. This is an excellent example of how a seemingly “useless” single piece of information can become valuable when compared to a larger quantity. The result data enabled the client to identify trends about the product’s popularity in different markets. A client approached me to scrape product review data for an extensive list of products from several e-commerce websites, including the rating, location of the reviewer, and the review text for each submitted review. Investment firms were primarily focused on gathering alternative data, like product reviews, price information, or social media posts to underpin their financial investments. That is exactly what web scraping is all about for me: extracting and normalizing valuable pieces of information from a website to fuel another value-driving business process.ĭuring this time, I saw companies use web scraping for all sorts of use cases. I was amazed to see how many data extractions, aggregation, and enrichment tasks are still done manually although they easily could be automated with just a few lines of code.

In the past, I have worked for many companies as a data consultant. Now, we have to extract the recipe in the HTML of the website and convert it to a machine-readable format like JSON or XML.

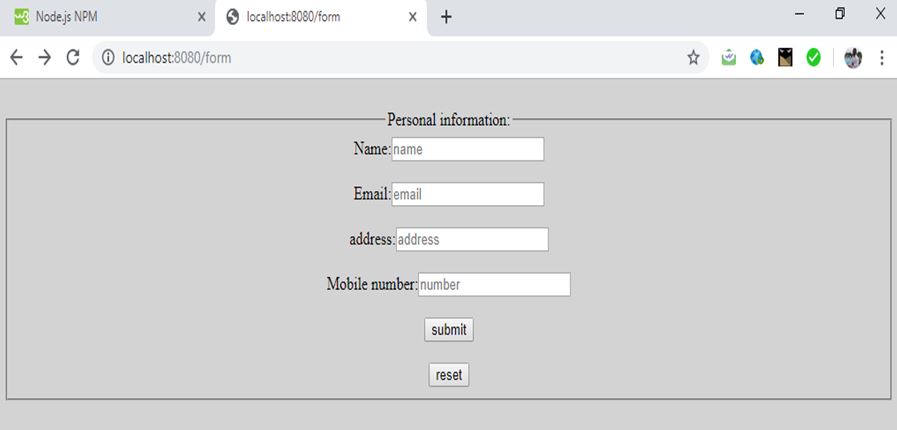

This step is like opening the page in your web browser when scraping manually. We first have to download the page as a whole. Sticking to our previous “noodle dish” example, this process usually involves two steps:

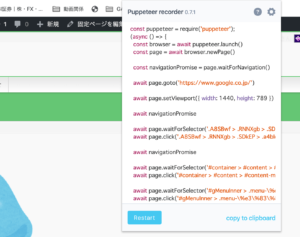

When using this term in the software industry, we usually refer to the automation of this manual task by using a piece of software. Hence, if you copy and paste a recipe of your favorite noodle dish from the internet to your personal notebook, you are performing web scraping. It merely describes the process of extracting information from a website. All of us use web scraping in our everyday lives. Let’s start with a little section on what web scraping actually means. In this tutorial, we will build a web scraper that can scrape dynamic websites based on Node.js and Puppeteer. However, when it comes to dynamic websites, a headless browser sometimes becomes indispensable. For a lot of web scraping tasks, an HTTP client is enough to extract a page’s data.
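The lead-generation task maps onto the two steps from the noodle example. First, download each page. Then parse the wanted data into a machine-readable structure. The following TypeScript code is a minimal sketch of that flow under simplifying assumptions:

- The names `collectLeads`, `extractContacts`, `ContactInfo`, and `Fetcher` are hypothetical.
- The regular expressions are deliberately naive.
- Contact names are skipped, since they are much harder to extract reliably.
- The page-downloading function is passed in as a parameter, so it can wrap either an HTTP client or a headless browser.

```typescript
// Hypothetical record for one scraped website (names are illustrative only).
interface ContactInfo {
  url: string;
  emails: string[];
  phones: string[];
}

// Step 1 is delegated to a caller-supplied function that returns a page's HTML.
// It could wrap an HTTP client or a headless browser such as Puppeteer.
type Fetcher = (url: string) => Promise<string>;

// Deliberately naive patterns. The phone pattern also matches other long
// digit runs (e.g. dates), so real projects need stricter validation.
const EMAIL_PATTERN = /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/g;
const PHONE_PATTERN = /\+?\d[\d\s().-]{6,}\d/g;

// Remove duplicates while keeping the first-seen order.
function unique(values: string[]): string[] {
  const trimmed = values.map((v) => v.trim());
  return trimmed.filter((v, i) => trimmed.indexOf(v) === i);
}

// Step 2: turn raw HTML into a structured, JSON-serializable record.
function extractContacts(url: string, html: string): ContactInfo {
  // Replace every tag (including its attributes) with a space so the
  // patterns only run on the page's text content.
  const text = html.replace(/<[^>]*>/g, " ");
  return {
    url,
    emails: unique(text.match(EMAIL_PATTERN) ?? []),
    phones: unique(text.match(PHONE_PATTERN) ?? []),
  };
}

// Both steps for a list of websites, processed one after another.
async function collectLeads(urls: string[], fetchHtml: Fetcher): Promise<ContactInfo[]> {
  const results: ContactInfo[] = [];
  for (const url of urls) {
    const html = await fetchHtml(url); // step 1: download
    results.push(extractContacts(url, html)); // step 2: parse
  }
  return results;
}
```

In Node.js 18 or later, a plain HTTP fetcher could be `async (url) => (await fetch(url)).text()`. For a dynamic website, the fetcher would instead load the page in Puppeteer and return `await page.content()`, which is the rendered HTML. Passing the collected records to `JSON.stringify` yields JSON output. The pages are processed sequentially, which is slower than fetching them in parallel but puts less load on the target websites.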
